With the number of new subnets being added, it can be hard to keep information current across all subnets, so data may be slightly out of date from time to time.

Vidaio is an open-source video processing subnet focused on AI-driven video upscaling (and soon compression/streaming). Its mission is to “democratise video enhancement through decentralisation, artificial intelligence, and blockchain technology”, providing creators and businesses with scalable, affordable, high-quality video processing. The Vidaio team emphasizes a decentralized, community-led approach: the project’s code is fully open-source and built atop Bittensor’s merit-based model. By leveraging Bittensor, Vidaio can tap into a distributed pool of GPUs and reward contributors via on-chain incentives, ensuring continual improvement of its AI models.

Vidaio uses a number of deep learning models to enhance low-resolution videos by predicting and generating high-resolution details. Unlike traditional upscaling methods, which rely on interpolation, its AI-based techniques analyze patterns, textures, and edges to reconstruct sharper and more realistic frames. Because processing is distributed across the network, Vidaio can adapt quickly to growing demand, making it a viable solution for businesses of all sizes. Decentralization also eliminates a single point of control, enhancing security and censorship resistance.
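To make the contrast with interpolation concrete, here is a minimal sketch of bilinear upscaling in plain Python. It is not Vidaio's code; the function name `bilinear_upscale` and the tiny sample frame are assumptions chosen for illustration. The point it shows is that interpolation only blends neighbouring pixels, so every output value stays within the range of the input and no new detail is created.

```python
def bilinear_upscale(frame, factor):
    """Upscale a 2-D grayscale frame (a list of rows) by an integer factor.

    Illustrative baseline only: each output pixel is a weighted average
    of up to four neighbouring source pixels.
    """
    src_h, src_w = len(frame), len(frame[0])
    out = []
    for y in range(src_h * factor):
        # Map the output row back to a fractional source row (clamped at the edge).
        sy = min(y / factor, src_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        fy = sy - y0
        row = []
        for x in range(src_w * factor):
            sx = min(x / factor, src_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            fx = sx - x0
            # Blend horizontally on the two source rows, then vertically.
            top = frame[y0][x0] * (1 - fx) + frame[y0][x1] * fx
            bottom = frame[y1][x0] * (1 - fx) + frame[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# Hypothetical 2x2 frame of brightness values.
frame = [[0, 100], [100, 200]]
upscaled = bilinear_upscale(frame, 2)
for row in upscaled:
    print(row)
```

A learned upscaler, by contrast, is trained to predict plausible texture and edge detail that a blend of neighbours cannot produce.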

Vidaio follows the standard Bittensor subnet design with miners and validators cooperating via two “synapses” (query types).

Products & Services

The product is a decentralized video processing solution offered through the Bittensor network, designed to address the increasing demand for high-quality, low-cost video processing tools. The subnet provides two core services:

AI-Driven Video Upscaling: It enhances lower-resolution video files (e.g., SD to HD or 4K) using machine learning models that predict pixel values for the higher-resolution output. This allows old or low-quality content (such as SD footage from the ’90s) to be transformed into HD or even 4K video, making it more suitable for modern devices and platforms. This service is already live in beta and has received positive community feedback.

AI-Driven Video Compression: This service focuses on reducing video file sizes without compromising visual quality. The compression model analyzes the content, applies optimized encoding, and eliminates redundancies to achieve significant reductions in file size (up to 80% smaller), while maintaining the same perceptual quality. Unlike traditional methods, the model adapts the compression process based on the unique characteristics of the video (e.g., dynamic motion or static scenes) to achieve optimal results. This is set to revolutionize video delivery, especially in content-heavy industries like streaming, by drastically lowering storage and bandwidth costs.
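As a rough illustration of what an "up to 80%" reduction means for storage, the arithmetic can be sketched as follows. The bitrate, duration, and function names are assumptions picked for the example, not figures from Vidaio, and 80% is the stated upper bound rather than a guaranteed result.

```python
def video_size_gb(bitrate_mbps, duration_minutes):
    """Approximate size in decimal gigabytes of a constant-bitrate video."""
    bits = bitrate_mbps * 1_000_000 * duration_minutes * 60
    return bits / 8 / 1_000_000_000


def compressed_size_gb(original_gb, reduction):
    """Size after removing the given fraction (0.80 means 80% smaller)."""
    return original_gb * (1 - reduction)


# Hypothetical input: a 90-minute title encoded at 8 Mbps.
original = video_size_gb(bitrate_mbps=8, duration_minutes=90)
smaller = compressed_size_gb(original, reduction=0.80)
print(f"{original:.2f} GB -> {smaller:.2f} GB")
```

Under these assumptions the file shrinks from about 5.4 GB to about 1.08 GB, and the same ratio applies to the bandwidth needed to deliver it.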

Technical Architecture

Vidaio’s tech stack combines decentralized blockchain components with AI/ML video pipelines. Core elements include:

Vidaio is developed by a multi-disciplinary team of industry professionals (names as listed on the website). Key members include:

Gareth Howells – Founder. Gareth has 20 years of experience in the product and operations field, having worked with major companies like Netflix, Disney, Sony, Spotify, Hulu, and even Pokémon. His expertise lies in understanding market needs and product development. Gareth is particularly focused on taking this product to market and solving real-world problems for content owners and video platforms.

Ahmad Ayad – Machine Learning Engineer (AI/ML specialist). Ahmad is the machine learning expert who primarily focuses on the video compression model. With a PhD in distributed learning, he has been with the team since its inception. His work involves using machine learning models to make video compression more efficient and applying pre-processing to reduce video artifacts before compression, ensuring better visual quality. His work in video compression forms the core of the product’s capabilities.

Gopi Jayaraman – Video Technology Expert (AV/multimedia veteran).

Medfil D – Subnet Developer (backend & AI/ML engineer).

Chinaza – UI/UX Designer.

Akinwunmi Aguda – Frontend Developer.

Marcus “mogmachine” Graichen – Angel Advisor (crypto investor and Bittensor community figure).

Many of the above contributors have public profiles (e.g. Marcus Graichen is known as “mogmachine” on Bittensor forums/X), and the GitHub repo shows activity by the Vidaio org. The project maintains an active presence on social media and forums: the official X (Twitter) handle is @vidaio_τ (declared as “open-source, decentralized video processing, subnet 85 on Bittensor” in the bio), and a community Discord is open via the invite on their site. All code and documentation are published on GitHub (confirmed by project links on their Medium blog), and the team engages with the Bittensor community for announcements and support.

The roadmap for the subnet outlines the key stages of development, focusing on scaling and enhancing its capabilities over time.

Upscaling: Currently live in beta, the upscaling feature allows content owners and video platforms to send low-resolution videos to miners for enhancement. The service is actively being tested and refined, with the team using industry-standard metrics (like VMAF and PieAPP score) to measure the perceptual quality of the upscaled videos. It has already received positive feedback from the community, and the team is working to improve quality further.

Video Compression: The compression model is expected to be launched soon, and it promises to revolutionize video storage and bandwidth consumption. By utilizing advanced AI, the compression service can reduce video file sizes by up to 80% without sacrificing perceptual quality. This will have a significant impact on industries that handle massive amounts of video data, including content delivery networks (CDNs) and video on demand (VOD) platforms.

Future Developments:

Go-to-Market Strategy:

The video subnet is positioned to tackle the $400 billion market for video processing, which includes sectors like streaming, cloud storage, and video post-production. The technology will be used in various industries such as surveillance, medical imaging, and industrial video applications. This makes it a versatile solution with the potential to scale across a broad range of markets.

A big thank you to Tao Stats for producing these insightful videos in the Novelty Search series. We appreciate the opportunity to dive deep into the groundbreaking work being done by subnets within Bittensor!

In this session, the team behind Vidaio provides an in-depth overview of their AI-powered video processing solution. They discuss the challenges facing video content owners and streaming platforms, particularly rising storage and bandwidth costs due to the explosive growth in video content online. The team introduces their core offerings: AI-driven video upscaling to enhance low-resolution videos to HD or 4K and AI-based video compression to reduce file sizes by up to 80% while maintaining perceptual quality. With a focus on solving real-world problems, they explain how the subnet is built on the Bittensor network to allow miners to participate in the processing tasks. The session also highlights the team’s expertise, their roadmap for the subnet’s future developments, and their strategic vision for partnerships and collaborations in the video processing space. The potential impact of this technology is significant, particularly in emerging markets where video delivery and storage solutions are critical.

Novelty Search is great, but for most investors trying to understand Bittensor, the technical depth is a wall, not a bridge. If we’re going to attract investment into this ecosystem then we need more people to understand it! That’s why Siam Kidd and Mark Creaser from DSV Fund have launched Revenue Search, where they ask the simple questions that investors want to know the answers to.

Recorded in July 2025, this episode of Revenue Search features Gareth Howells, founder of Vidaio, the first Bittensor subnet dedicated to AI-powered video compression and upscaling. With 25+ years of experience in video backend systems, Gareth explains how Vidaio is solving major industry pain points: reducing file sizes by up to 80% without perceptible quality loss, and upscaling old low-res footage to crisp 4K using AI.

The compression service—targeting VOD platforms, telecoms, and CDNs—offers massive savings in storage, bandwidth, and battery use, especially crucial for emerging markets. While upscaling has a niche use case (e.g., content archives), Gareth confirms 90% of future revenue will stem from compression. Vidaio plans to charge enterprise clients in fiat via API integrations, with revenue cycling back to support the subnet’s alpha token. Trusted Execution Environments and encryption tech are on the roadmap to ease enterprise security concerns. Compression testing begins within days, with a full market launch expected in under 90 days. Gareth’s vision is clear: deliver better video, cheaper, and redefine how the world experiences content.

Vidaio (Subnet 85) does AI video upscaling (SD → HD/4K) and compression (dramatically smaller files with similar perceived quality). Their consumer web app is live in beta—demo showed ~95% size reduction—with paid tiers coming (think ~$0.05/min, ~75% margins). Beyond creators, the big targets are streamers/broadcasters, legacy libraries, security/medical, and autonomous fleets—anyone drowning in storage/CDN costs. Near-term roadmap adds a streaming pipeline (auto encoding ladders + Hippius storage), plus R&D on colorization and selective video generation/inpainting.

For enterprises needing NDAs and tighter control, Vidaio introduced an Enterprise Track: vetted “Elite miners” execute jobs off-subnet, and clients pay in fiat, split roughly 75% to miners and 25% to Vidaio. Miners must post an AlphaBond, locking the equivalent of about 50% of their fiat payout in ALPHA until the client accepts the work. This creates ALPHA demand and lockups, while miners receive fiat to cover infrastructure costs (reducing sell pressure). Vidaio’s cut first covers OPEX, with any surplus allocated flexibly among buybacks, product, and growth. Benchmark goals: surpass Topaz Labs (~$8.3M/yr consumer) and Visionular (~$10.5M/yr enterprise) while keeping the subnet as the innovation engine.
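The Enterprise Track split described above can be worked through with a small sketch. The percentages are the approximate figures quoted in the text; the function name, field names, and the $1,000 job value are assumptions for illustration, and the actual settlement mechanics may differ.

```python
def enterprise_job_split(client_payment_usd, miner_share=0.75, bond_ratio=0.50):
    """Split a fiat payment between miners and Vidaio, and size the AlphaBond.

    miner_share: approximate fraction of the payment paid to miners.
    bond_ratio: approximate fraction of the miner payout whose value must be
                locked in ALPHA until the client accepts the work.
    """
    miner_payout = client_payment_usd * miner_share
    vidaio_cut = client_payment_usd - miner_payout
    alpha_bond_value = miner_payout * bond_ratio
    return {
        "miner_payout_usd": miner_payout,
        "vidaio_cut_usd": vidaio_cut,
        "alpha_bond_value_usd": alpha_bond_value,
    }


# Hypothetical $1,000 enterprise job.
split = enterprise_job_split(1000.0)
for name, value in split.items():
    print(f"{name}: {value:.2f}")
```

For a $1,000 job under these assumptions, miners receive $750 in fiat, Vidaio keeps $250, and the miners lock ALPHA worth about $375 until acceptance.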

We continue to explore new markets with strong use cases for Vidaio.

Next up, we have Autonomous Vehicles, a fascinating and exploding sector with a huge need for our services!

(I'm sure it won't be long until we see an Autonomous Vehicle subnet!)

Happy reading, and let us…

Hot cooking … 🍜🍜🍜

@vidaio_ #SN85 #bittensor

We cannot wait for the release of this!

Exploring New Markets!

While entertainment is our first love, the medical imaging market is a multibillion-dollar, video-dense space. In our latest blog post, we do a deep dive into how we are perfectly placed to be the catalyst for change here.

Happy Reading!…

We are super excited to be speaking at this event:

and sharing the stage with some legendary subnets!

Thanks @SiamKidd, for the opportunity!

On Sat 22nd Nov in the UK I'm holding a very special event. One that I haven't looked forward to as much as this for ages.

It's for sophisticated investors, SSAS pension holders and business owners.

Global currency expansion (Central Banks printing currency) is a stealth tax…

We are booked in for the revenue search with @SiamKidd and @MarkCreaser on 21st Oct, @mogmachine will be in attendance!

You can check out our first revenue search here: We've made a lot of progress with the subnet since then, but our addressable market…

Fun price action on @vidaio_ today. No idea what it was about, just know I was super happy to sweep up even more.

@mogmachine announced they will be on @dsvfund Revenue Search in a few weeks time.

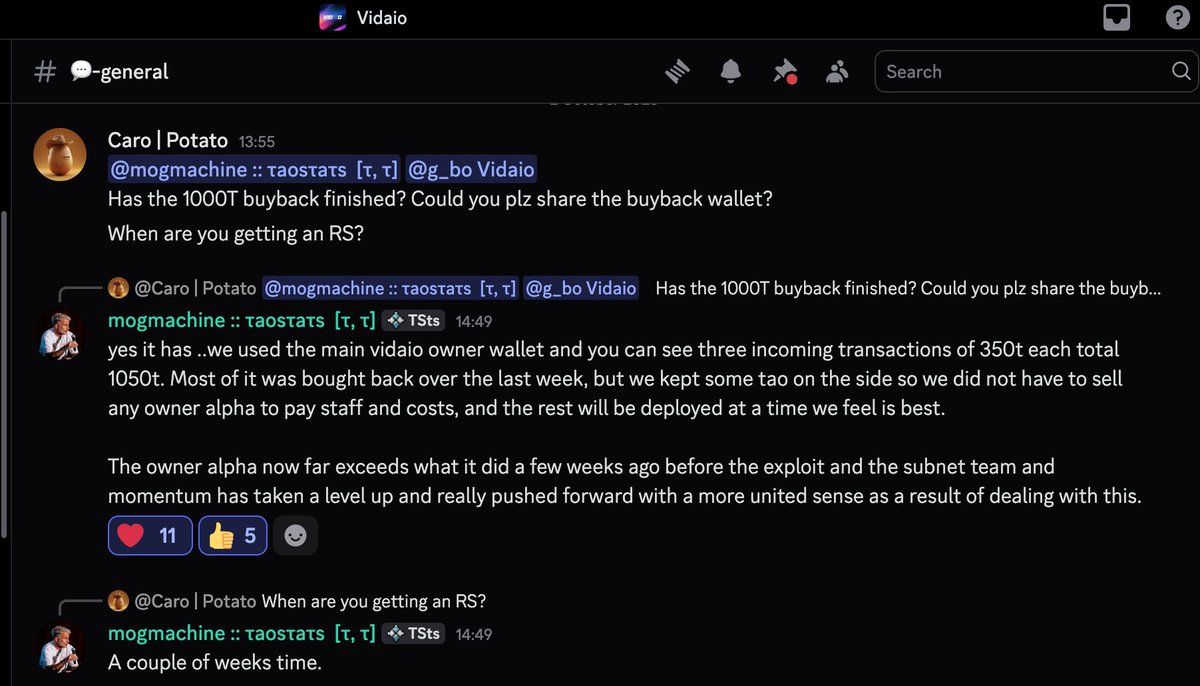

Don't fade a team that lost $300k in alpha and just bought it all back and a…