With so many new subnets being added, it can be hard to keep information current across all of them, so the data here may occasionally be slightly out of date.

This subnet uses a distributed approach to train Large Language Models on web-based datasets. It incentivizes three resources: compute, bandwidth, and latency. Compute drives the training of each miner’s local model, while bandwidth and latency facilitate the averaging of local model weights using a process called butterfly all-reduce. Once this process is completed, every miner receives a unified, globally averaged gradient with which to update its model weights.
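The averaging step can be pictured with a small single-process simulation. This is only a sketch, assuming the common formulation of butterfly all-reduce in which each of N peers is responsible for one chunk of the vector (scatter, reduce, then gather). The function names `split_chunks` and `butterfly_all_reduce` are hypothetical, and no real networking is involved.

```python
from typing import List


def split_chunks(vec: List[float], n_parts: int) -> List[List[float]]:
    """Split vec into n_parts contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(len(vec), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        size = base + (1 if i < extra else 0)
        chunks.append(vec[start:start + size])
        start += size
    return chunks


def butterfly_all_reduce(peer_vectors: List[List[float]]) -> List[List[float]]:
    """Simulate one averaging round; returns the vector each peer ends up with."""
    n = len(peer_vectors)
    # Phase 1 (scatter): every peer splits its vector; chunk j is sent to peer j.
    scattered = [split_chunks(v, n) for v in peer_vectors]
    # Phase 2 (reduce): peer j averages the n copies of chunk j it received.
    reduced = []
    for j in range(n):
        copies = [scattered[i][j] for i in range(n)]
        reduced.append([sum(vals) / n for vals in zip(*copies)])
    # Phase 3 (gather): every peer collects all averaged chunks and concatenates them.
    averaged = [x for chunk in reduced for x in chunk]
    return [list(averaged) for _ in range(n)]


if __name__ == "__main__":
    print(butterfly_all_reduce([[1.0, 2.0, 3.0, 4.0], [3.0, 4.0, 5.0, 6.0]]))
```

In this formulation each peer sends and receives roughly one full vector's worth of data per round regardless of the number of peers, which is why bandwidth and latency matter for this step.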

Training Process:

Miners train the collective model on specific dataset segments. The training is iterative, with both local and global tracking of epochs and steps. Miners perform local training on their assigned data and participate in gradient averaging using the butterfly all-reduce method.

Dataset:

The subnet utilizes the “HuggingFaceFW/fineweb” dataset with the “sample-350BT” configuration.

Data is streamed in real-time from Hugging Face servers for efficient large-scale data handling.

Text is tokenized with the GPT-2 tokenizer (“distilgpt2”).
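The dataset settings above could be wired together roughly as follows. This is a sketch rather than the subnet's actual loader: it assumes the Hugging Face `datasets` and `transformers` libraries, and the helper names `open_fineweb_stream` and `tokenize_rows` are hypothetical.

```python
from itertools import islice
from typing import Callable, Dict, Iterable, Iterator, List


def open_fineweb_stream():
    """Return (row iterator, tokenizer) for the dataset and tokenizer named above.

    The imports are local so this module still loads where the libraries
    are not installed; calling the function requires network access.
    """
    from datasets import load_dataset
    from transformers import AutoTokenizer

    rows = load_dataset(
        "HuggingFaceFW/fineweb",
        name="sample-350BT",
        split="train",
        streaming=True,  # rows are fetched lazily instead of downloading the full sample
    )
    tokenizer = AutoTokenizer.from_pretrained("distilgpt2")
    return rows, tokenizer


def tokenize_rows(
    rows: Iterable[Dict[str, str]],
    encode: Callable[[str], List[int]],
    max_rows: int,
) -> Iterator[List[int]]:
    """Yield token ids for the "text" field of the first max_rows rows."""
    for row in islice(rows, max_rows):
        yield encode(row["text"])


if __name__ == "__main__":
    rows, tokenizer = open_fineweb_stream()
    for ids in tokenize_rows(rows, tokenizer.encode, max_rows=2):
        print(len(ids))
```

Keeping `tokenize_rows` independent of the libraries means it can be exercised with any iterable of rows and any encoding function.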

Model Submission:

After each gradient averaging step, miners push the updated model to the Hugging Face Hub.

The model is tagged with the current epoch number.

In case of upload failure, the system retries within a set limit.
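The bounded retry behaviour can be sketched as below. The upload itself is passed in as a callable because the source does not show the real upload call. The name `push_with_retries`, its default limits, and the use of the bare epoch number as the tag string are all assumptions made for illustration.

```python
import time
from typing import Callable


def push_with_retries(
    push: Callable[[str], None],
    epoch: int,
    max_retries: int = 3,   # hypothetical limit; the real value is not given in the source
    delay_s: float = 1.0,   # hypothetical pause between attempts
) -> bool:
    """Call push(tag) until it succeeds or max_retries attempts have failed."""
    tag = str(epoch)  # assumed tag format: the current epoch number as a string
    for attempt in range(1, max_retries + 1):
        try:
            push(tag)
            return True
        except Exception as exc:  # a real uploader would catch narrower error types
            print(f"upload attempt {attempt}/{max_retries} failed: {exc}")
            if attempt < max_retries:
                time.sleep(delay_s)
    return False
```

Returning a boolean rather than raising lets the caller decide whether a failed upload should interrupt training or simply be logged.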

Validation:

Validators perform two main queries: “Train” and “AllReduce.”

For “Train” queries, validators check miners’ loss, gradients, and dataset indices.

For “AllReduce” queries, they initiate gradient averaging and verify miner participation.

Incentive Mechanism:

Bandwidth Score: Measures miners’ efficiency in sharing model states.

Gradient Score: Compares miner-reported gradients to validator-calculated gradients.

Steps Score: Rewards miners based on the volume of data trained in each step.
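To make the Gradient Score more concrete, here is one plausible way to compare two gradients. The source does not state which metric the subnet uses, so cosine similarity clipped to [0, 1] is purely an assumption, and `gradient_score` is a hypothetical name.

```python
import math
from typing import Sequence


def gradient_score(miner_grad: Sequence[float], validator_grad: Sequence[float]) -> float:
    """Cosine similarity between the two gradients, clipped to the range [0, 1].

    Assumed metric for illustration only; not taken from the subnet's code.
    """
    if len(miner_grad) != len(validator_grad):
        raise ValueError("gradients must have the same length")
    dot = sum(a * b for a, b in zip(miner_grad, validator_grad))
    norm_m = math.sqrt(sum(a * a for a in miner_grad))
    norm_v = math.sqrt(sum(b * b for b in validator_grad))
    if norm_m == 0.0 or norm_v == 0.0:
        return 0.0  # a zero gradient carries no directional information
    return max(0.0, dot / (norm_m * norm_v))
```

Under this assumption, a miner whose reported gradient points in the same direction as the validator's recomputed gradient scores close to 1, while an unrelated or opposite gradient scores 0.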

Starting the year incredibly grateful to our Open Source contributors.

Over the holidays, while working on a PR to migrate our Mechanism 0 DataLoader to R2, @jorritvangils spotted a critical bug in our miner code. He quickly merged a fix (PR #87) to

Fix dataloader and blocklist block mismatch by JorritvanGils · Pull Request #87 · dstrbtd/Distrib...

Currently, self.current_block is updated continuously, causing a mismatch between the value passed to DatasetLoader.next_pag...

Last Friday, we launched Mechanism 1 on Subnet 38's main-net! 🚀

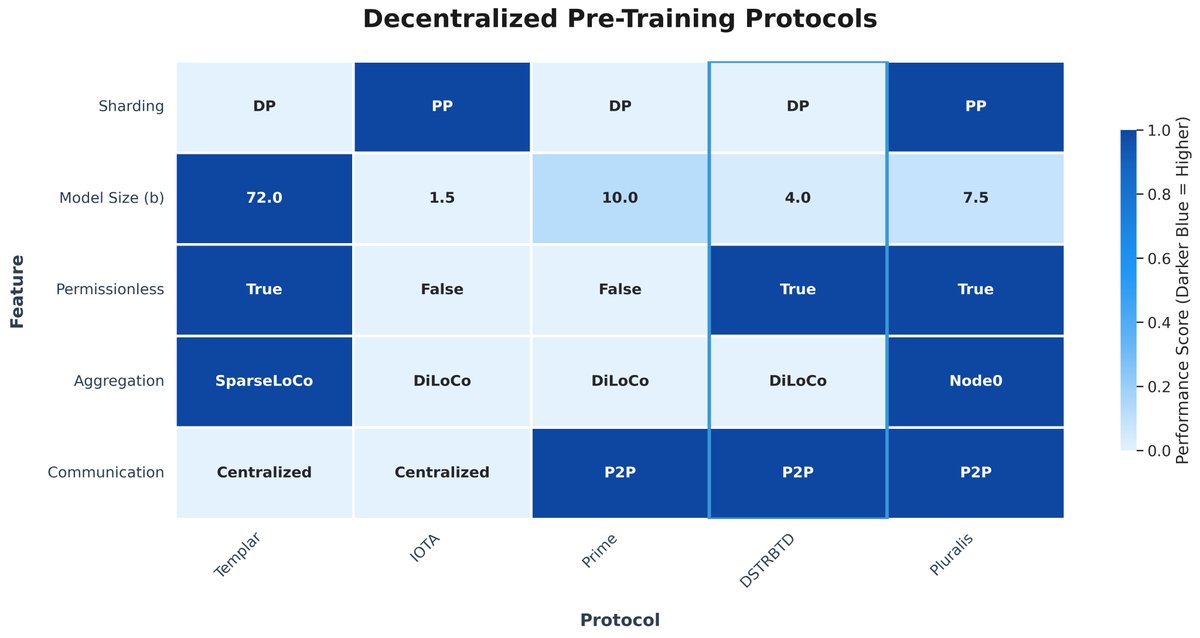

Mechanism 1 is a winner-takes-all mechanism that aims to incentivise miners to develop SOTA distributed training strategies (see the "Aggregation" row in the heat-map in the attached post).

These optimised

Decentralized pre-training has accelerated rapidly over the past year, with multiple teams running public experiments each taking a different approach to the same problem.

Here is a high-level comparison across sharding strategy, permissions, model scale, aggregation, and

It's worth noting that there are also excellent teams like Nous Research, Grail and Gensyn working on decentralized post-training.

This thread focuses specifically on decentralized pre-training, where the size and type of information being shared are quite different. Both

If we’ve missed any other public decentralized pre-training efforts, we’d love for people to share them with us.

Especially interested in protocols exploring novel aggregation techniques, compression algorithms or incentive mechanisms.

A question we often get from members of our community is: "In layman's terms, what is DSTRBTD's long-term vision?"

Put simply, it's building community-owned artificial intelligence.

Right now, the world’s most powerful AI is owned and controlled by a small number of large

DSTRBTD’s Run 4 is our most stable attempt to date at training a 4B parameter model in a fully permissionless, trustless and decentralised setting: https://dash.dstrbtd.ai/performance.

Over the past week, we’ve seen an average of 10 participants per AllReduce (the process of sharing

DSTRBTD's Mechanism 1 is now producing reproducible benchmarks for distributed training optimizers.

Each optimizer is evaluated in a sandbox environment that trains NanoGPT variants for 10k steps. We record:

• Final Loss

• Communication Volume

• Throughput

These metrics are