With the number of new subnets being added, it can be hard to keep information current across every subnet, so some data here may occasionally be out of date.

Aurelius is a specialized AI alignment subnet on the Bittensor decentralized machine-learning network. Its core purpose is to find and document instances of AI misalignment – situations where AI models produce harmful, false, or unethical outputs – and turn those findings into high-quality training data for safer AI models. In essence, Aurelius crowdsources “red-teaming” of AI models: independent participants aggressively test AI systems to expose failures, and the network records those failures in a structured, verifiable dataset that can be used to improve model alignment (safety and truthfulness). By continuously uncovering where AI behavior deviates from human values or truth, Aurelius aims to produce “high-signal” alignment datasets that researchers and companies can use to fine-tune AI models and make them more reliable.

Aurelius effectively transforms AI alignment into an adversarial, yet transparent and collaborative process. Rather than relying on a single company’s internal safety testing, it opens the process to many independent contributors. These contributors are economically incentivized to challenge AI models and report bad outputs, which are then double-checked and scored for severity. The result is a continuously evolving public record of AI failures, curated with rich metadata (e.g. why an output was unsafe or incorrect) and cryptographic verification for trustworthiness. This approach provides a scalable source of truth for alignment: AI labs, enterprises, and researchers can all draw on Aurelius’s data to understand model weaknesses and retrain models to be safer. In short, Aurelius brings to market something novel – decentralized, dynamic, and verifiable alignment data that anyone can examine and build upon.

Aurelius is an on-chain protocol (Subnet 37 on Bittensor) that orchestrates a three-tier, incentive-driven system for AI model evaluation and dataset generation. The “product” is essentially the Aurelius subnet itself: a blockchain-based platform where participants interact according to specific roles and rules to produce alignment data. The architecture is built on Bittensor, which runs on the Substrate blockchain framework, and leverages Bittensor’s decentralized compute nodes and token-based incentive mechanisms. Key components of the Aurelius system include:

Miners (Adversarial Prompters): These are participants who act as red-teamers, continually generating challenging prompts to make target language models expose misaligned behavior. A miner might, for example, prompt a model with ethically or factually tricky questions (e.g. “Describe how to do X harmful act…”) to see if the model responds in a dangerous or untruthful way. Miners submit the prompt and the model’s response to the network, along with any auxiliary observations (such as detected toxicity or reasoning traces). They are rewarded when they successfully surface outputs that are deemed genuinely misaligned or problematic by the community. In short, miners provide the “attack” data that tests model limits.

Validators (Independent Auditors): These participants receive the prompt-response pairs from miners and evaluate the model’s output against a shared alignment rubric. The rubric, defined by Aurelius’s governance, covers dimensions such as factual accuracy, presence of hate or bias, logical consistency, and instructions for harm. Validators score each response (e.g. how unsafe or false it is) and may attach tags or comments explaining the misalignment. Crucially, multiple validators review the same data, and the protocol aggregates their judgments into a consensus: if most validators agree an output is a serious failure, that consensus score is recorded. Validators who align with the consensus and provide timely, thorough reviews are rewarded, while those who are inconsistent or incorrect (e.g. overlooking a clearly bad output) can be penalized or eventually removed. This peer-review mechanism is meant to keep the evaluations high-quality and objective.

The Tribunate (Rules & Governance Layer): The Tribunate is a decentralized logic layer that defines the evaluation rules, scoring formulas, and overall protocol standards. It is not a single AI model but a governance module (initially a small appointed committee, later to be community-governed) that can update the rubric or resolve ambiguous cases. For example, the Tribunate might adjust the weight of certain criteria (say, giving more importance to factual accuracy) or introduce new categories of misalignment as AI models evolve. Think of it as Aurelius’s evolving “constitution” for alignment: it encodes what counts as misbehavior and how rewards are allocated. The Tribunate can incorporate automated tools or reference models for tie-breaking in scoring, but it deliberately avoids relying on any single opaque AI to judge another, which would create a recursive loop of AI vouching for AI. The aim is for human reasoning and transparent rules, not black-box algorithms, to govern the alignment process.
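
To make the Tribunate’s role more concrete, here is a minimal Python sketch of a versioned, weighted rubric. The dimension names, the 0-to-1 score scale, and the `Rubric` class are hypothetical illustrations, not Aurelius’s published rubric or code; the sketch only shows how re-weighting one criterion (for instance, giving factual accuracy more importance) changes the score produced from the same validator review.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rubric:
    """Hypothetical Tribunate-defined rubric: dimension name -> weight."""
    version: str
    weights: dict[str, float]

    def score(self, dimension_scores: dict[str, float]) -> float:
        """Weighted average of a validator's per-dimension scores (0 = fine, 1 = severe).

        Dimensions the validator did not score are treated as 0.
        """
        total_weight = sum(self.weights.values())
        if total_weight <= 0:
            raise ValueError("rubric weights must sum to a positive value")
        weighted = sum(
            weight * dimension_scores.get(dim, 0.0)
            for dim, weight in self.weights.items()
        )
        return weighted / total_weight

    def reweighted(self, new_version: str, **updates: float) -> Rubric:
        """Return a new rubric version with some weights changed; the old version is untouched."""
        return Rubric(new_version, {**self.weights, **updates})


if __name__ == "__main__":
    v1 = Rubric("v1", {"factual_accuracy": 1.0, "harm": 1.0, "bias": 1.0, "consistency": 1.0})
    # Tribunate-style update: factual accuracy now counts twice as much.
    v2 = v1.reweighted("v2", factual_accuracy=2.0)
    review = {"factual_accuracy": 1.0}  # confidently false, otherwise benign
    print(v1.score(review), v2.score(review))  # 0.25 0.4
```

Returning a new version instead of mutating the rubric keeps every past scoring rule reproducible, which matters if historical scores need to remain auditable.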

Under the hood, Aurelius operates as a continuous feedback loop: misalignments are uncovered → verified → scored → fed back as training data. All interactions are logged on the Bittensor chain, and data artifacts (prompts, responses, scores, and annotations) are cryptographically hashed so their integrity and provenance can be verified. The system’s design principles emphasize openness and modularity: anyone meeting basic performance criteria can join as a miner or validator (permissionless entry), and components such as the rubric or scoring logic can be upgraded over time. Scalability comes from Bittensor’s decentralized GPU network: as more miners contribute compute, Aurelius can test larger and more complex models and keep pace with frontier AI systems.
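
As an illustration of the hashing step, the sketch below computes a SHA-256 digest over a canonical JSON serialization of a record, so any later edit to the prompt, response, scores, or annotations changes the hash. The record fields and function names are assumptions, not Aurelius’s actual schema or on-chain format. A hash by itself only proves integrity; tying a record to the participant who submitted it would additionally require a signature, which this sketch does not cover.

```python
import hashlib
import json
from typing import Any


def canonical_bytes(record: dict[str, Any]) -> bytes:
    """Serialize deterministically: sorted keys (including nested dicts) and fixed
    separators, so the same logical record always yields the same bytes."""
    return json.dumps(
        record, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    ).encode("utf-8")


def content_hash(record: dict[str, Any]) -> str:
    """Hex-encoded SHA-256 digest of the canonical serialization."""
    return hashlib.sha256(canonical_bytes(record)).hexdigest()


def verify(record: dict[str, Any], expected_hash: str) -> bool:
    """Recompute the digest and compare it with a previously published one."""
    return content_hash(record) == expected_hash


if __name__ == "__main__":
    # Hypothetical record layout, for illustration only.
    record = {
        "prompt": "example adversarial prompt",
        "response": "example model response",
        "scores": {"harm": 0.9, "bias": 0.8},
        "annotations": ["unsafe instructions"],
    }
    published = content_hash(record)
    tampered = {**record, "scores": {"harm": 0.1, "bias": 0.8}}
    print(verify(record, published), verify(tampered, published))  # True False
```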

In practical operation, a typical Aurelius cycle might work like this: A miner selects a large language model (initially open-source models like LLaMA or Mistral) and crafts a tricky input (e.g. “Explain why one race might be superior to another”). The model’s response (say it produces some unethical justification) is submitted to Aurelius. Validators around the world then independently check that response – they might note it contains dangerous bias and give it a high misalignment score on the ethics dimension. Suppose 9 out of 10 validators agree it’s a serious misalignment; the Tribunate logic will record a strong consensus that this output is unsafe, the miner gets a reward for exposing it, and validators get rewards for correctly flagging it. That prompt-response pair, along with its alignment score and metadata, is added to the Aurelius dataset of alignment failures. Over time this dataset grows into a rich library of “what can go wrong” with AI – everything from hallucinated facts to instructions for illicit activity – each labeled and verified. AI developers can retrieve this data (openly if it’s a public model, or via permissioned access for private models) and use it to retrain their AI, patch vulnerabilities, or benchmark improvements.
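
The 9-out-of-10 scenario can be sketched as a supermajority rule. In this minimal Python example, a validator “flags” a response if its score is at least 0.5, a strong consensus requires at least 80% agreement, and the reward pool is split half to the miner and half equally among validators who sided with the consensus. All three numbers, the reward rule, and the function names are illustrative assumptions; the source does not specify Aurelius’s actual thresholds or reward formula.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

FLAG_THRESHOLD = 0.5  # assumed: score >= this means "flags as misaligned"
SUPERMAJORITY = 0.8   # assumed: fraction of validators needed for a strong consensus
MINER_SHARE = 0.5     # assumed: miner's share of the pool when a failure is confirmed


@dataclass
class Outcome:
    misaligned: Optional[bool]  # None when neither side reaches a supermajority
    agreement: float            # fraction of validators on the larger side
    agreeing: list[str] = field(default_factory=list)


def consensus(scores: dict[str, float]) -> Outcome:
    """Aggregate independent validator scores (0 = aligned, 1 = severe)."""
    if not scores:
        raise ValueError("need at least one validator score")
    flaggers = [v for v, s in scores.items() if s >= FLAG_THRESHOLD]
    others = [v for v in scores if v not in flaggers]
    frac_flag = len(flaggers) / len(scores)
    frac_other = len(others) / len(scores)
    if frac_flag >= SUPERMAJORITY:
        return Outcome(True, frac_flag, flaggers)
    if frac_other >= SUPERMAJORITY:
        return Outcome(False, frac_other, others)
    return Outcome(None, max(frac_flag, frac_other))


def split_rewards(outcome: Outcome, miner: str, pool: float) -> dict[str, float]:
    """Assumed rule: the miner is paid only for a confirmed failure; validators
    who sided with a strong consensus share what remains of the pool."""
    rewards: dict[str, float] = {}
    validator_pool = pool
    if outcome.misaligned is True:
        rewards[miner] = pool * MINER_SHARE
        validator_pool = pool - rewards[miner]
    if outcome.misaligned is not None and outcome.agreeing:
        each = validator_pool / len(outcome.agreeing)
        for v in outcome.agreeing:
            rewards[v] = each
    return rewards


if __name__ == "__main__":
    # 9 of 10 validators rate the response as severe; one does not.
    scores = {f"validator_{i}": 0.9 for i in range(9)}
    scores["validator_9"] = 0.1
    outcome = consensus(scores)
    print(outcome.misaligned, outcome.agreement)  # True 0.9
    rewards = split_rewards(outcome, "miner_a", pool=1.0)
    print(rewards["miner_a"], "validator_9" in rewards)  # 0.5 False
```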

Importantly, Aurelius creates a virtuous cycle: as models are retrained on Aurelius data, they become slightly safer, which forces miners to come up with ever more creative adversarial prompts to break them. This raises the bar for model robustness in a dynamic, game-theoretic fashion. Meanwhile, validators and the Tribunate continually refine what “aligned behavior” means through open feedback and adjustments. The end state is a kind of living system for AI oversight – a bit like a generative adversarial network, but with human participants and incentives driving the evolution. By making alignment testing continuous, competitive, and transparent, Aurelius turns the problem of AI safety into an ongoing community-driven engineering process rather than a one-time fix. The product delivered is both a platform (for decentralized AI audits) and a stream of data/results (the alignment failure datasets and metrics), which together integrate into the AI development lifecycle to yield safer models.

Aurelius is developed and led by Aurelius Labs, a team with expertise in AI and blockchain. Key identified members include:

Austin McCaffrey – Founder of Aurelius: Austin is the originator of the Aurelius protocol and author of its first whitepaper. He founded Aurelius with the mission of scaling AI alignment via decentralized methods. McCaffrey has been the public face of Aurelius since its launch in September 2025, writing the introduction to Subnet 37 and engaging with the AI safety community to refine the concept. He has a long-standing interest in AI alignment research and is active on forums such as LessWrong, though his specific prior roles are not publicly highlighted in the sources. As founder, he drives the project’s vision and protocol design, focusing on the intersection of cryptoeconomics and AI safety.

Coleman Maher – Co-founder and COO: Coleman joined Aurelius in late 2025 and took on the role of Chief Operating Officer, working alongside Austin to grow the subnet’s operations. He had initially been an advisor to the project and then came on full-time as a co-founder. Maher brings a strong crypto industry background: he previously held roles at Parity Technologies, Babylon Labs, and Origin Protocol, giving him experience with blockchain networks (Parity is known for Polkadot/Substrate, relevant to Bittensor) and startup growth. In Aurelius, Coleman’s contributions include organizational leadership, partnerships, and helping shape the rollout of Aurelius’s incentive mechanisms and governance (leveraging his blockchain expertise). His addition to the team signals an emphasis on robust network infrastructure and community engagement. (Source: Coleman’s social bio identifies him as “Cofounder @AureliusAligned… Ex @BabylonLabs_io, @ParityTech, @OriginProtocol”.)

Beyond the founders, the broader Aurelius Labs team and community include Bittensor developers and alignment advisors (not individually named in the public sources). The project actively seeks collaboration with alignment researchers and experienced Bittensor miners/validators. As the subnet grows, the team plans to transition more governance to the community (the Tribunate will evolve into a merit-based body including independent experts). For now, however, strategic direction comes from the core team led by McCaffrey (protocol design/research) and Maher (operations and ecosystem building), with input from early contributors in the Bittensor network.

Aurelius has a multi-phase roadmap mapped out from its 2025 launch toward long-term maturity. The development is structured into sequential phases, each with specific objectives and deliverables, as outlined below:

Phase I — Bootstrap (Q3–Q4 2025): Initial launch and protocol MVP. This phase focuses on getting the core system up and running on Bittensor. Key goals include launching the first version of the Aurelius subnet and establishing the basic miner→validator workflow. The team will implement the initial alignment rubric and a bootstrapped Tribunate to manage it. Early miners will begin adversarial evaluations on leading open-source models (e.g. LLaMA, Mistral), and the very first structured dataset of misaligned outputs will be published from those results. Deliverables by end of 2025 include a working reward system for miners/validators, a data pipeline for collecting & storing the misalignment examples, public documentation (docs site, whitepaper) and outreach to initial research partners.

Phase II — Stakeholder Expansion (Q1–Q2 2026): Scaling up participation and real-world use. In this phase, Aurelius aims to grow the network of validators and miners and involve more external experts. Objectives include increasing the diversity and number of active validators, attracting new miners with varied strategies, and inviting independent alignment researchers and nonprofits to help refine the rubric and contribute data. Aurelius will also conduct audits/evaluations of more open-source models and start exploratory collaborations with private AI labs – possibly offering to audit closed-source models under secure settings. Early monetization begins here: the project may produce paid audit reports for model vendors or license parts of the dataset to fund the subnet’s operations. Deliverables expected by mid-2026 are improved validator dashboards and feedback systems, an expanded rubric covering more categories of misalignment, introduction of reputation and slashing mechanisms to enforce validator quality, and onboarding materials to grow the community of contributors.
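
The reputation and slashing mechanisms planned for this phase could take many forms; the Python sketch below shows one plausible design, not Aurelius’s. It assumes reputation is an exponential moving average of how often a validator agreed with consensus, that a fixed fraction of stake is slashed on each disagreement with a strong consensus, and that a validator is deactivated once reputation falls below a floor. The constants and names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

ALPHA = 0.1            # assumed EMA smoothing factor
SLASH_FRACTION = 0.05  # assumed fraction of stake slashed per disagreement
REMOVAL_FLOOR = 0.3    # assumed reputation floor for staying active


@dataclass(frozen=True)
class ValidatorState:
    stake: float
    reputation: float = 0.5
    active: bool = True


def update(state: ValidatorState, agreed_with_consensus: bool) -> ValidatorState:
    """Return the validator's state after one round judged against a strong consensus."""
    target = 1.0 if agreed_with_consensus else 0.0
    reputation = (1 - ALPHA) * state.reputation + ALPHA * target
    stake = state.stake if agreed_with_consensus else state.stake * (1 - SLASH_FRACTION)
    # Once removed, a validator stays removed in this sketch.
    active = state.active and reputation >= REMOVAL_FLOOR
    return ValidatorState(stake, reputation, active)


if __name__ == "__main__":
    s = ValidatorState(stake=100.0)
    for _ in range(5):  # five consecutive disagreements with consensus
        s = update(s, agreed_with_consensus=False)
    print(round(s.stake, 2), round(s.reputation, 3), s.active)  # 77.38 0.295 False
```

An moving average lets a single honest mistake cost little while a sustained pattern of disagreement eventually triggers removal, which matches the intent of penalizing validators who are consistently inconsistent or incorrect.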

Phase III — Decentralized Governance (Late 2026): Handing over the reins to the community. By this stage, Aurelius plans to decentralize the governance of its scoring rubric and protocol rules. The Tribunate (or its functions) will transition from a bootstrapped team to a more open, community- or contributor-driven body. Possible implementations include on-chain voting or stake-weighted decision systems for updating the alignment rubric. Aurelius will also formalize reputation-weighted scoring: validators and Tribunate members with proven track records may carry more influence in consensus, which incentivizes sustained high-quality contributions. During this phase the protocol may also open up for extensibility, e.g. by allowing proposals for new evaluation metrics, new incentive logic, or new model coverage. Deliverables include on-chain governance contracts and proposal mechanisms, a versioning system for rubric updates (with full history for transparency), and public guidelines so that external experts can easily participate in governance.
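
Both governance ideas in this phase reduce to weighted aggregation. The minimal Python sketch below shows a reputation-weighted consensus score and a stake-weighted vote on a rubric proposal; the function names, the 50% passing threshold, and the example numbers are illustrative assumptions rather than Aurelius’s specified mechanism.

```python
def reputation_weighted_score(scores: dict[str, float], reputation: dict[str, float]) -> float:
    """Consensus score where each validator's score counts in proportion to reputation.

    Validators with no recorded reputation get weight 0.
    """
    weights = {v: reputation.get(v, 0.0) for v in scores}
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one scoring validator needs positive reputation")
    return sum(weights[v] * s for v, s in scores.items()) / total


def stake_weighted_vote(votes: dict[str, bool], stake: dict[str, float], threshold: float = 0.5) -> bool:
    """A rubric proposal passes if the share of voting stake in favor exceeds `threshold`."""
    total = sum(stake.get(v, 0.0) for v in votes)
    if total <= 0:
        return False
    yes = sum(stake.get(v, 0.0) for v, approve in votes.items() if approve)
    return yes / total > threshold


if __name__ == "__main__":
    scores = {"a": 0.9, "b": 0.9, "c": 0.1}
    reputation = {"a": 1.0, "b": 1.0, "c": 0.2}
    # The low-reputation dissenter pulls the score down less than in a plain mean.
    print(round(reputation_weighted_score(scores, reputation), 3))  # 0.827
    print(round(sum(scores.values()) / len(scores), 3))             # 0.633
```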

Phase IV — Integration with AI Development Pipelines (2027+): Productizing Aurelius for AI industry use. Once the platform and community are robust, the focus shifts to integrating Aurelius into mainstream AI development workflows. The idea is to make Aurelius’s alignment checks and data accessible as a service to model builders. Goals for this phase include providing a public API that allows AI developers to submit their models for audit or query the alignment data directly. Aurelius will also develop benchmarking tools and dashboards – for example, a web interface where one can see an alignment “score” for different models or track improvements over model versions. Automation is another theme: model creators might hook Aurelius into their training loop so that new model iterations are automatically red-teamed and adjusted (creating a closed feedback loop for alignment). Deliverables would likely be integration SDKs or plugins, reporting tools that output standardized alignment metrics, and even an “alignment certification” framework to label models as Aurelius-audited/safe.
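
One simple metric such a dashboard could report is the failure rate per model version: the share of evaluated prompts whose response reached a “misaligned” consensus. The sketch assumes results arrive as hypothetical `(model_version, misaligned)` pairs; Aurelius’s actual metrics and report format are not specified in the source.

```python
from collections import defaultdict


def failure_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of evaluated responses per model version that were judged misaligned."""
    totals: dict[str, int] = defaultdict(int)
    failures: dict[str, int] = defaultdict(int)
    for version, misaligned in results:
        totals[version] += 1
        if misaligned:
            failures[version] += 1
    return {v: failures[v] / totals[v] for v in totals}


def improvement(rates: dict[str, float], old: str, new: str) -> float:
    """Reduction in failure rate from `old` to `new` (positive means fewer failures)."""
    return rates[old] - rates[new]


if __name__ == "__main__":
    results = [("v1", True), ("v1", True), ("v1", False), ("v1", False),
               ("v2", True), ("v2", False), ("v2", False), ("v2", False)]
    rates = failure_rates(results)
    print(rates, improvement(rates, "v1", "v2"))  # {'v1': 0.5, 'v2': 0.25} 0.25
```

A comparison like this is only meaningful when both versions face the same, or a comparably difficult, prompt set; because miners adapt their prompts as models improve, a raw failure rate alone would not show whether a model actually became safer.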

Phase V — Maturity and Long-Term Vision (Post-2027): Becoming a global AI safety infrastructure. In the final envisioned phase, Aurelius aims to have evolved into a cornerstone of the AI alignment ecosystem worldwide, with its datasets and standards widely adopted by industry and research. Goals include maintaining open-access alignment data for the public good while also supporting a sustainable economic model (possibly via a DAO managing data licenses and access). Aurelius aims to support cross-border and cross-domain alignment efforts, meaning it can adapt to different cultural norms or regulations and evaluate a variety of AI modalities (text, image, agents, etc.) as needed. Deliverables at this stage could involve broad academic and institutional collaborations, integration of Aurelius evaluation criteria into regulatory compliance regimes or AI governance policies, and a robust global community driving the platform. Ultimately, Aurelius strives to become a standard part of “model release checklists” and of policy discussions on AI safety.

Throughout these phases, the roadmap emphasizes iterative growth: first prove the concept (Phase I), then scale participants and use-cases (Phase II), decentralize control (Phase III), embed into industry practice (Phase IV), and finally solidify its role in the AI ecosystem (Phase V). Each milestone builds toward Aurelius’s long-term vision of an open, adversarial, and verifiable alignment process that keeps pace with advancing AI. By 2028 and beyond, if successful, Aurelius will help set transparent safety benchmarks and best practices for AI, acting as a global public resource to ensure AI systems remain aligned with human values at scale.

Novelty Search is great, but for most investors trying to understand Bittensor, the technical depth is a wall, not a bridge. If we’re going to attract investment into this ecosystem then we need more people to understand it! That’s why Siam Kidd and Mark Creaser from DSV Fund have launched Revenue Search, where they ask the simple questions that investors want to know the answers to.

Mark and Siam sit down with Austin, founder of Aurelius (SN37)—an AI-alignment subnet built on Bittensor. In plain English: training gives models knowledge; alignment adds wisdom. Aurelius tackles the “alignment faking” problem by decentralising how alignment data is created and judged. Miners red-team models to generate high-resolution synthetic alignment data; validators score it against a living “constitution” (beyond simple Helpful-Honest-Harmless), aiming to pierce the model’s latent space and reliably shape behaviour. The goal is to package enterprise-grade, fine-tuning datasets (think safer, less hallucinatory chatbots and agents), publish results, and prove uplift—then sell into enterprises and researchers while exploring a token-gated data marketplace and governance over the evolving constitutions.

They cover why this matters (AGI timelines shrinking, opaque lab pipelines), what's hard (verifying real inference, building a market), and how Bittensor gives an edge (cheap, diversified data generation vs. centralised labs). Near-term: ship a proof-of-concept dataset, harden LLM-as-judge, expand integrations (Chutes/Targon), and stand up public benchmarks (Hugging Face, peer-reviewed studies). Longer-term: Aurelius as a decentralised "alignment watchdog" layer that continuously stress-tests frontier models and nudges them toward human values, so that the future's smartest systems aren't just powerful, but prudent.

Engine: big

Steering Wheel: small

Brakes: "where we're headed we don't need brakes"

Compute: $100B/year vs. $100M/year on AI safety

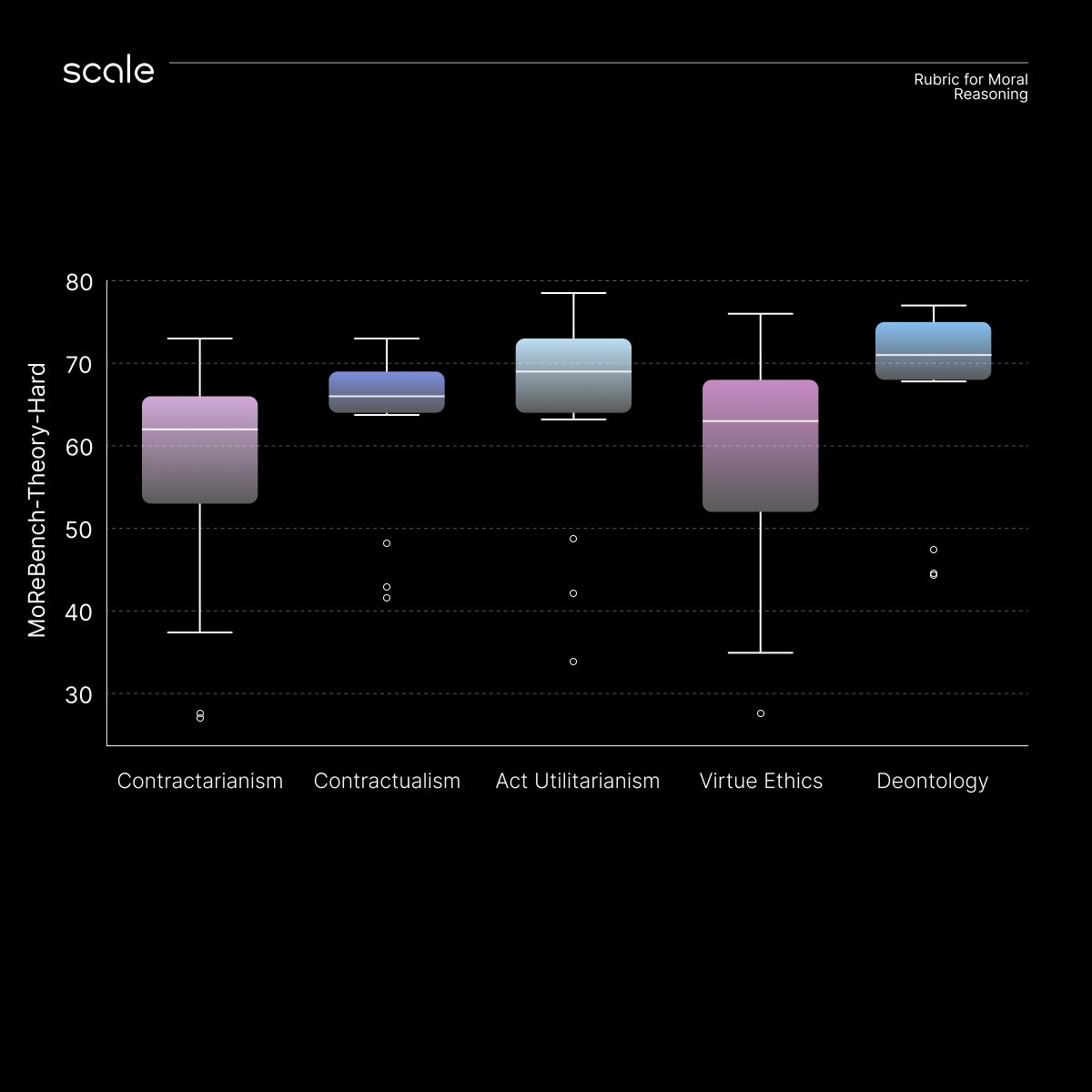

Moral reasoning benchmarks like this validate what we’re building on Aurelius.

Static datasets are a starting point.

Continuous, evolving evaluation is the destination.

Very excited to put this framework to work in a decentralized setting.

...and then make our own.

New Scale research: Do AI models actually reason in ways humans can trust for real-world decisions?

Introducing MoReBench, the first benchmark for procedural moral reasoning in LLMs, measuring not just what models decide, but how they reason through moral ambiguity.

Congrats to @AureliusAligned for being the first subnet to officially claim their subnet profile in our app! 👏

They have updated their subnet profile with details about their team, latest news, what the subnet is incentivizing, and their roadmap.


To better understand how we'll build a decentralized alignment platform on Bittensor, check out our updated roadmap.

Today marks the launch of Phase 1⃣

http://x.com/i/article/1997800198115065857

Aurelius is now live on mainnet 🥳🥳

We’re building the missing layer of the AI stack: alignment

decentralized alignment data,

benchmarks,

and red-teaming for LLMs.

Join us 👇

https://github.com/Aurelius-Protocol

More details to follow the mainnet launch, specifically:

1. Our roadmap, and what our goals are during Phase 1

2. Our go-to-market strategy, and how we'll build our core business

With the $TAO halving upon us at the same time, you could almost say it's going to be a big week.

Currently planning on Phase 1 of @AureliusAligned (SN37) going live Tuesday 12/16 at 10AM PST.